A new Senate bill aims to make it easier for human creators to find out if their work is being used without permission to develop artificial intelligence, marking the latest effort to combat a lack of transparency in the development of generative artificial intelligence.

The Transparency and Accountability for Artificial Intelligence Networks (TRAIN) Act would allow copyright owners to subpoena the training records of generative AI models if the owner can demonstrate a “good faith belief” that their work was used to train the model.

Developers would have to disclose only training material that is “sufficient to determine with certainty” whether the copyright holder’s work was used. Failure to do so would create a legal presumption, until proven otherwise, that the AI developer did use the copyrighted work.

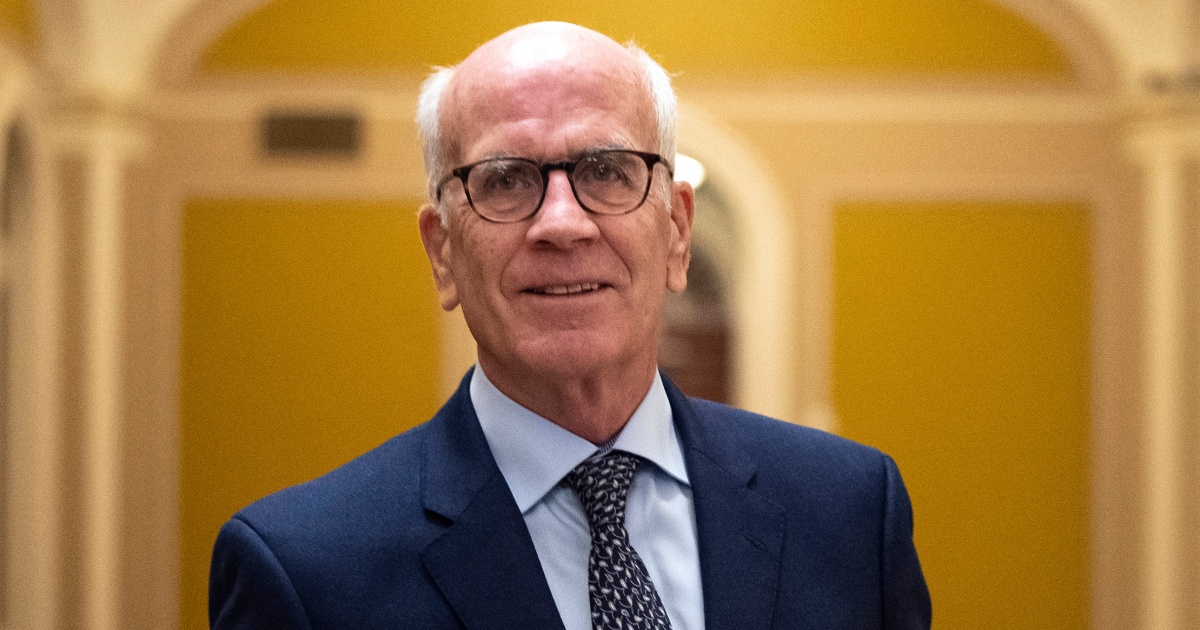

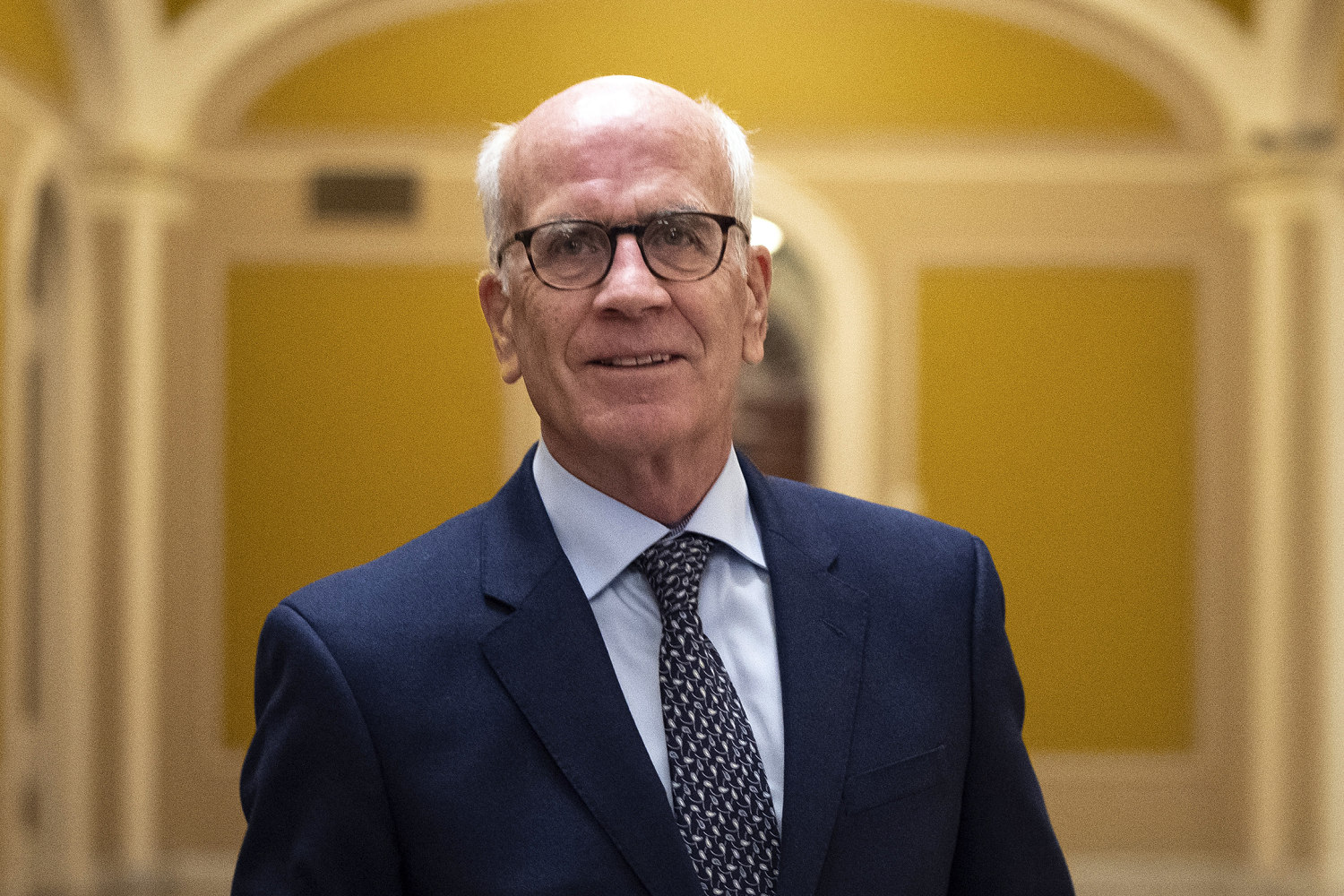

Sen. Peter Welch, D-Vt., who introduced the bill Thursday, said the country must “set a higher standard for transparency” as artificial intelligence continues to integrate into Americans’ lives.

“It’s simple: if your work is being used to train artificial intelligence, you, the copyright holder, should have a way to determine that it’s being used by a training model, and if so, you should be compensated,” Welch said in a statement. “We need to give America’s musicians, artists and creators a means to learn when AI companies are using their work to make models without the artists’ permission.”

The explosion of available generative artificial intelligence technologies has raised a number of legal and ethical questions for artists who fear that these tools will allow others to recreate their work without consent, credit or compensation.

Although many major AI developers do not publicly release their models’ training data, a viral Midjourney spreadsheet gave credence to artists’ concerns earlier this year when it listed thousands of people whose work was used to develop the popular AI art generator.

Companies that rely on human creative labor have also tried to take on AI developers.

In recent years, news organizations such as The New York Times and The Wall Street Journal have sued AI companies such as OpenAI and Perplexity AI for copyright infringement. And the world’s biggest record labels joined together in June to sue two popular AI music production companies, alleging that they trained their models on decades of copyrighted audio recordings without consent.

As legal tensions mount, more than 36,000 creative professionals, including Oscar-winning actor Julianne Moore, author James Patterson and Radiohead’s Thom Yorke, have signed an open letter calling for a ban on the unauthorized use of human art to train AI.

Comprehensive federal legislation governing the development of artificial intelligence does not yet exist, although a number of states have attempted to introduce specific regulations related to artificial intelligence, especially around deepfakes. In September, California enacted two bills aimed at protecting actors and other performers from unauthorized use of their digital likenesses.

Similar bills have been introduced in Congress, including the bipartisan “NO FAKES” Act, aimed at protecting human likenesses from non-consensual digital replications, and the “AI CONSENT” Act, which would require online platforms to obtain informed consent before using consumers’ personal data to train AI. So far, neither has received a vote.

In a news release, Welch said the TRAIN Act has been endorsed by several organizations, including the Screen Actors Guild-American Federation of Television and Radio Artists (SAG-AFTRA), the American Federation of Musicians, and the Recording Academy, as well as major music labels including Universal Music Group, Warner Music Group, and Sony Music Group.

However, there are only a few weeks left in this Congress, and members are focusing on must-pass priorities like avoiding a government shutdown on December 20. Welch’s office said it plans to reintroduce the bill next year, as any pending legislation will need to be reintroduced in the new Congress that convenes in early January.